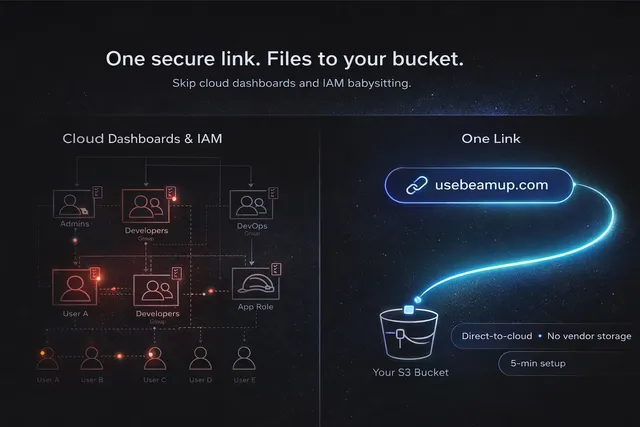

Stop the Download-Re-Upload Dance: A Friction-Free Upload Portal for S3

Skip double-handling. Learn how an S3 upload portal lets partners drag and drop large files directly into your bucket: fast, secure, and with zero third-party storage, thanks to BeamUp.


Ever watched a 20 GB set of raw video rushes crawl down from a client’s Dropbox - only to push the very same bits back up to Amazon S3 so your editors can start cutting? That download → re-upload shuffle burns half a day and doubles your bandwidth bill for absolutely no business value.

I’ve been that infrastructure owner, staring at progress bars and wondering why “cloud” sometimes feels like dial-up. Fortunately, there’s a better pattern: an S3 upload portal workflow that lets partners drag and drop files straight into your bucket. No middleman. No duplicates. No headaches.

Why Double-Handling Hurts More Than You Think

- Time tax - Each 20 GB file you handle twice costs 40 GB of bandwidth and hours of idle hands.

- Money leak - You’re paying for two storage surfaces: Dropbox or Google Drive seats for staging, plus S3 PUT requests and storage at the end. Even if consumer clouds don’t charge per-GB egress, the duplicate footprint shows up in license counts and local cache space.

- Security risk - Extra hops create copies that must be deleted, tracked, and explained to ISO auditors.

- Human friction - When files live in two or three places, teammates end up Slacking “Which copy is the latest?” Send every upload straight to S3 and those version-chasing pings disappear.

What Most Teams Try First (and Why It Breaks)

| Work-around | Where It Helps | Where It Fails |
|---|---|---|
| FTP / SFTP | Familiar to vendors | Single-thread uploads, firewall gymnastics, no cloud metadata |
| Dropbox File Requests | Friendly UX | Extra hop, seat cost, daily bandwidth caps |
| Google Drive Upload Links | Free egress | Same duplicate storage, quota juggling |
| MFT Gateways | Compliance features | Third-party storage; still pay to pull into AWS |
| Temporary IAM Users | Direct-to-S3 | Who’s rotating those keys, and revoking them later? |

After enough band-aids you realise the real cure is direct-to-S3 upload. But rolling that yourself is no picnic.

DIY Direct-to-S3: Technically Possible, Practically Painful

I’ve built more presigned-URL systems than I care to admit. You need to:

- Spin up a Lambda function behind API Gateway to mint short-lived presigned PUT URLs.

- Teach partners to chunk uploads with multipart upload API calls.

- Handle retries, part numbers, and mysterious EntityTooSmall errors.

- Glue on a UI that doesn’t look like 1997.

- Babysit bucket permissions so partners can upload but never list.
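For a sense of what the first bullet involves, here is a minimal sketch of minting a short-lived presigned PUT URL by hand with AWS Signature Version 4, using only the Python standard library. The function name, bucket, key, region, and credentials are illustrative assumptions. In practice an SDK helper (for example boto3's `generate_presigned_url`) does this for you, and the Lambda/API Gateway wiring is left out.

```python
import datetime
import hashlib
import hmac
from typing import Optional
from urllib.parse import quote

MAX_EXPIRES = 7 * 24 * 3600  # SigV4 presigned URLs can live at most 7 days


def _hmac(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def presign_put_url(bucket: str, key: str, region: str,
                    access_key: str, secret_key: str,
                    expires: int = 900,
                    now: Optional[datetime.datetime] = None,
                    session_token: Optional[str] = None) -> str:
    """Return a SigV4 query-signed URL permitting one PUT of `key` (now must be UTC)."""
    if not 1 <= expires <= MAX_EXPIRES:
        raise ValueError("expires must be between 1 second and 7 days")
    if now is None:
        now = datetime.datetime.now(datetime.timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    datestamp = now.strftime("%Y%m%d")

    host = f"{bucket}.s3.{region}.amazonaws.com"  # virtual-hosted-style endpoint
    canonical_uri = "/" + quote(key, safe="/")     # encode the key once, keep "/"
    scope = f"{datestamp}/{region}/s3/aws4_request"

    params = {
        "X-Amz-Algorithm": "AWS4-HMAC-SHA256",
        "X-Amz-Credential": f"{access_key}/{scope}",
        "X-Amz-Date": amz_date,
        "X-Amz-Expires": str(expires),
        "X-Amz-SignedHeaders": "host",
    }
    if session_token:  # temporary (STS) credentials: the token is signed too
        params["X-Amz-Security-Token"] = session_token
    canonical_query = "&".join(
        f"{quote(k, safe='')}={quote(v, safe='')}" for k, v in sorted(params.items())
    )

    canonical_request = "\n".join([
        "PUT",
        canonical_uri,
        canonical_query,
        f"host:{host}\n",    # canonical headers, each terminated by a newline
        "host",              # signed headers
        "UNSIGNED-PAYLOAD",  # the body is unknown when the URL is minted
    ])
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256",
        amz_date,
        scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])

    # Signing key chain: secret -> date -> region -> service -> "aws4_request".
    signing_key = _hmac(("AWS4" + secret_key).encode("utf-8"), datestamp)
    for part in (region, "s3", "aws4_request"):
        signing_key = _hmac(signing_key, part)
    signature = hmac.new(signing_key, string_to_sign.encode("utf-8"),
                         hashlib.sha256).hexdigest()

    return f"https://{host}{canonical_uri}?{canonical_query}&X-Amz-Signature={signature}"
```

The partner's browser then sends the file body with a plain HTTP PUT to that URL before it expires. Anyone holding the URL can write that one key, which is why short expiries matter. When the URL is signed with temporary credentials, it also stops working once those credentials expire.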

It works, right up until Marketing needs a drag-and-drop S3 uploader by this afternoon.

Meet BeamUp: Your 5-Minute Upload Portal for S3

BeamUp turns that month-long build into a coffee-break setup:

- Direct-to-cloud - Files land straight in your bucket; BeamUp never stores customer data.

- Secret-less architecture - Least-privilege, short-lived credentials; no long-term keys to leak.

- Branded portals - Set brand colours and a custom portal name; ship a polished page for receiving partner files into S3 in minutes.

- Any size, any type - If S3 can take it (up to 5 TB per object), BeamUp can hand it off.

- Zero-config multipart & Transfer Acceleration - We tweak the knobs; you just share the link.

- Roadmap-ready - Google Cloud Storage and Azure Blob support land later this year.
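Part of what "tweaking the knobs" involves is choosing an endpoint. Below is a minimal sketch, using a hypothetical helper name, of how an uploader might switch between the regular regional endpoint and the Transfer Acceleration endpoint. Accelerated transfers carry an extra per-GB charge, so acceleration is a per-bucket decision rather than a free default.

```python
def upload_host(bucket: str, region: str, accelerate: bool = False) -> str:
    """Return the virtual-hosted S3 hostname a browser upload would target."""
    if accelerate:
        # Transfer Acceleration must already be enabled on the bucket, and the
        # bucket name must be DNS-compliant with no periods. Requests are still
        # signed for the bucket's home region even though the hostname omits it.
        if "." in bucket:
            raise ValueError("Transfer Acceleration does not support bucket names containing '.'")
        return f"{bucket}.s3-accelerate.amazonaws.com"
    return f"{bucket}.s3.{region}.amazonaws.com"
```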

Mini Walkthrough

- Connect bucket - Paste your bucket ARN; BeamUp’s CloudFormation template provisions the least-privilege role.

- Customise portal - Pick your brand palette and portal name.

- Share link - Send a unique, password-protected URL to partners.

- Partners drag & drop - Uploads go straight from the browser to S3, with real-time progress.
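Connecting a bucket comes down to a role whose policy allows writes and very little else. The sketch below shows one plausible shape for such a policy, built in Python so it can be templated per partner. The bucket name, prefix, and per-partner layout are hypothetical, and BeamUp's actual CloudFormation template may grant a different set of actions.

```python
import json


def partner_upload_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy that lets a partner write under one prefix and nothing else."""
    # s3:PutObject also authorizes CreateMultipartUpload, UploadPart and
    # CompleteMultipartUpload, so large browser uploads keep working.
    # s3:ListBucket and s3:GetObject are deliberately absent: partners can add
    # objects but cannot enumerate or download what is already in the bucket.
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PartnerUploadOnly",
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",
                    "s3:AbortMultipartUpload",
                    "s3:ListMultipartUploadParts",
                ],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix.strip('/')}/*",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(partner_upload_policy("example-media-bucket", "incoming/acme"), indent=2))
```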

Old Way 😩 vs. BeamUp Way 🚀

- Old Way: Partner PC (20 GB file) → download → Local Server → re-upload → Your S3

- BeamUp Way: Partner PC (20 GB file) → direct upload → Your S3

Two transfers become one. Progress bars shrink by half.

Security Spotlight

“Does BeamUp see my data?”

Nope. BeamUp’s control plane issues short-lived session tokens; the browser uploads straight to your S3 bucket. File bytes never traverse BeamUp servers. Need an audit trail? Turn on S3 server access logging or AWS CloudTrail data events (which record object-level calls such as PutObject) in your account; BeamUp stays completely out of that path.
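If you turn on server access logging, each record is a line of space-separated fields, so a small parser is enough to answer "who uploaded what, and when?". This is a minimal sketch that assumes the documented order of the first ten fields; the function names are illustrative. Access logs are delivered on a best-effort basis, so CloudTrail data events are the better record when an auditor needs completeness.

```python
import re
from typing import Dict, Iterable, Iterator, Tuple

# A log field is a [bracketed timestamp], a "quoted string", or a bare token.
_TOKEN = re.compile(r'\[[^\]]*\]|"[^"]*"|\S+')

# The first ten fields of the S3 server access log format, in documented order.
_FIELDS = ("bucket_owner", "bucket", "time", "remote_ip", "requester",
           "request_id", "operation", "key", "request_uri", "http_status")


def parse_line(line: str) -> Dict[str, str]:
    tokens = _TOKEN.findall(line)
    if len(tokens) < len(_FIELDS):
        raise ValueError("line has too few fields for an S3 access log record")
    record = dict(zip(_FIELDS, tokens))
    record["time"] = record["time"].strip("[]")
    record["request_uri"] = record["request_uri"].strip('"')
    return record


def successful_puts(lines: Iterable[str]) -> Iterator[Tuple[str, str, str, str]]:
    """Yield (time, remote_ip, requester, key) for each successful single-request PUT."""
    for line in lines:
        rec = parse_line(line)
        # Multipart uploads are logged under other operations (REST.PUT.PART for
        # each part, for example); extend this filter after checking your own logs.
        if rec["operation"] == "REST.PUT.OBJECT" and rec["http_status"] == "200":
            yield rec["time"], rec["remote_ip"], rec["requester"], rec["key"]
```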

ROI Snapshot

Example: 50 files × 20 GB each, weekly

| Metric | Old Way | BeamUp |
|---|---|---|
| Total Data Moved | 2 000 GB (two hops) | 1 000 GB |
| Hours Waiting (50 MB/s link) | ≈ 11 h | ≈ 5.6 h |
| Duplicate Storage Seats | Dropbox × 5 ≈ $60/mo | 0 |
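The snapshot is simple arithmetic, so you can easily rerun it with your own file counts and link speed. This is a minimal sketch that assumes decimal units and a link that is the only bottleneck; the function name is illustrative.

```python
from typing import Tuple


def weekly_transfer(files: int, gb_per_file: float, hops: int,
                    link_mb_per_s: float) -> Tuple[float, float]:
    """Return (GB moved, hours of transfer time) for one weekly batch.

    Uses decimal units (1 GB = 1,000 MB) and assumes the link is the only
    bottleneck, with transfers running back to back.
    """
    gb_moved = files * gb_per_file * hops
    hours = gb_moved * 1000 / link_mb_per_s / 3600
    return gb_moved, hours


old_gb, old_h = weekly_transfer(50, 20, hops=2, link_mb_per_s=50)  # download, then re-upload
new_gb, new_h = weekly_transfer(50, 20, hops=1, link_mb_per_s=50)  # partner straight to S3
print(f"old: {old_gb:,.0f} GB, {old_h:.1f} h | direct: {new_gb:,.0f} GB, {new_h:.1f} h")
# prints: old: 2,000 GB, 11.1 h | direct: 1,000 GB, 5.6 h
```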

Multiply that across a quarter and BeamUp pays for itself long before Procurement finishes the memo.

Related Reading & Next Steps

- 📄 How it works: How to Receive Customer Files Directly in Your S3 Bucket

- 🌐 Multi-cloud: GCS and Azure Blob support arrive later this year, with the same portal and new back-ends.

FAQ

How big can a single upload be?

BeamUp hands off to native S3 multipart upload, so the hard limit is S3’s own 5 TB per object, roomy enough for 8K ProRes masters.
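The 5 TB ceiling and S3's EntityTooSmall error both come from its multipart rules: an upload can have at most 10,000 parts, and every part except the last must be at least 5 MiB. Below is a minimal part-size planner under those rules. The function name and the choice to round up to whole MiB are assumptions, not BeamUp's algorithm.

```python
from typing import Tuple

MIB = 1024 ** 2
MIN_PART = 5 * MIB          # every part except the last must be >= 5 MiB
MAX_PARTS = 10_000          # part numbers run from 1 to 10,000
MAX_OBJECT = 5 * 1024 ** 4  # 5 TiB, the per-object ceiling (binary units)


def _ceil_div(a: int, b: int) -> int:
    return -(-a // b)  # integer ceiling, no float rounding surprises


def plan_parts(size: int) -> Tuple[int, int]:
    """Return (part_size, part_count) for a multipart upload of `size` bytes.

    Picks the smallest whole-MiB part size that still fits in 10,000 parts.
    Up to 5 TiB this stays far below S3's 5 GiB per-part maximum.
    """
    if not 0 < size <= MAX_OBJECT:
        raise ValueError("size must be between 1 byte and 5 TiB")
    min_for_count = _ceil_div(size, MAX_PARTS)
    part_size = max(MIN_PART, _ceil_div(min_for_count, MIB) * MIB)
    return part_size, _ceil_div(size, part_size)


for label, size in [("20 GB rushes", 20 * 10**9), ("5 TiB master", MAX_OBJECT)]:
    part_size, count = plan_parts(size)
    print(f"{label}: {count} parts of {part_size // MIB} MiB")
```

Choosing the smallest legal part size keeps each retry cheap. Raising it trades fewer requests for costlier retries.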

Does BeamUp ever store my files?

Never. We issue short-lived credentials only; file bytes bypass BeamUp entirely.

Can I keep using presigned URLs for edge cases?

Absolutely. BeamUp creates no lock-in; your bucket remains yours. Use whichever pattern fits the workflow.

Ready to Drop the Double Transfer?

Dragging a file shouldn’t drag your team. BeamUp delivers an enterprise-grade S3 upload portal in five minutes flat.

Ready to try BeamUp? Book a 15-minute demo.

Want to give it a spin? Sign Up Now.